Past & Current Projects:

My research involves developing and testing visualization, display and interaction methods in the context of image-guided surgery. I'm particularly interested in how we can improve the spatial and depth understanding of volume-rendered medical data, and in studying the impact of augmented reality visualization on particular surgical tasks.

Augmented Reality for Neurovascular Surgery (current)

In neurovascular surgery, and in particular surgery for arteriovenous malformations (AVMs), the surgeon must map pre-operative images of the patient to the patient on the operating room (OR) table in order to understand the topology and locations of vessels below the visible surface. This type of spatial mapping is not trivial, is time consuming, and may be prone to error. Using augmented reality (AR), we can register the microscope/camera image to pre-operative patient data to help the surgeon understand the topology, location, and type of the vessels lying below the surface of the patient. This may reduce surgical time and increase surgical precision.

In this project, as well as studying a mixed reality environment for neurovascular surgery, we will examine and evaluate which visualization techniques provide the best spatial and depth understanding of the vessels beyond the visible surface.


Cerebral Vascular Visualization (current)

Surgeons make use of cerebral vascular images obtained through angiography for diagnosis, surgical planning and guidance for stenosis, aneurysms and arteriovenous malformations (AVMs). However, cerebral vascular images are often difficult to understand due to the complexity of vessels and furcations which overlap at different depths. Improving depth perception of cerebral vasculature may therefore help diagnosis, surgical planning, and intra-operative guidance.

In this project, we focus on developing new visualization techniques and performing empirical studies that consider the effect of different perceptual cues (stereopsis, fog, chromadepth, and depicting edges) on depth perception of cerebral vascular volumes.

MIR

While working at GRIS at the University of Tuebingen in 2005/06, I developed software for medical image registration. In particular, the focus of my work was the registration of three-dimensional rotational angiography (3DRA) to CT or MRI. Image registration is the process of transforming different sets of data into one coordinate system. Once this is done, it is possible to compare or integrate data obtained from different sources, for example data obtained using multiple acquisition techniques (e.g. MRI, CT, X-ray). Image registration in the medical domain can be used for finding the transformation between a patient's 'real anatomy' and a model (in computer-guided surgery), for comparing a patient's anatomy over time (e.g. the progress of a brain tumour), for comparing a patient's anatomy to an atlas, for aligning information from different imaging modalities, etc.
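
As a rough illustration of what "transforming different sets of data into one coordinate system" means, the sketch below fits a rigid 2D transform (rotation plus translation) to paired landmark points in the least-squares sense. It is a toy example under stated assumptions, not the 3DRA-to-CT/MRI method used in the software described above: the function names `fit_rigid_2d` and `apply_rigid_2d`, the 2D setting, and the use of known point correspondences are all chosen here for illustration.

```python
import math

def fit_rigid_2d(moving, fixed):
    """Least-squares rotation angle and translation mapping moving -> fixed.

    Both arguments are equal-length lists of (x, y) landmarks, where
    moving[i] is assumed to correspond to fixed[i].
    """
    n = len(moving)
    mcx = sum(p[0] for p in moving) / n
    mcy = sum(p[1] for p in moving) / n
    fcx = sum(p[0] for p in fixed) / n
    fcy = sum(p[1] for p in fixed) / n

    # Accumulate dot and cross products of the centred point pairs.
    s_dot = 0.0
    s_cross = 0.0
    for (mx, my), (fx, fy) in zip(moving, fixed):
        ax, ay = mx - mcx, my - mcy
        bx, by = fx - fcx, fy - fcy
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx

    # The angle that best aligns the centred point sets.
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)

    # The translation carries the rotated moving centroid onto the fixed one.
    tx = fcx - (c * mcx - s * mcy)
    ty = fcy - (s * mcx + c * mcy)
    return theta, (tx, ty)

def apply_rigid_2d(theta, t, points):
    """Rotate each point by theta about the origin, then translate by t."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + t[0], s * x + c * y + t[1]) for x, y in points]

# Hypothetical landmarks in the "moving" image and their positions in the
# "fixed" image (generated here from a known transform).
moving = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0)]
fixed = apply_rigid_2d(math.pi / 6, (3.0, -1.0), moving)
theta, t = fit_rigid_2d(moving, fixed)
registered = apply_rigid_2d(theta, t, moving)  # now in the fixed frame
```

Once `registered` is expressed in the fixed image's coordinates, the two data sets can be compared or overlaid directly. Real medical registration is typically 3D and often intensity-based rather than landmark-based.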

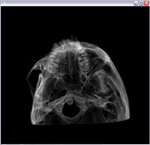

Stereo Volume Rendering

In 2005 I completed my Master's thesis in the Medical Computing Lab at Queen's University under the supervision of Dr. James Stewart and Dr. Randy Ellis. My thesis work examined adding perceptual cues to images from the X-ray domain.

Recent advances in commodity graphics hardware provide new capabilities in the study of stereoscopic volume rendering. In the medical community, volume rendering is used to create 3D anatomical models for diagnostic purposes, surgical planning, and surgical guidance. A digitally reconstructed radiograph (DRR) illumination model can be used to simulate X-ray images. Unlike surface-rendered medical images, however, a volume-rendered DRR lacks depth information.
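
To see why a DRR carries no depth information, consider a minimal sketch of a purely absorptive ray model based on the Beer-Lambert law. This is an illustrative assumption about the general form of such a model, not the renderer used in the thesis; the function name `drr_intensity` and the sample values are hypothetical.

```python
import math

def drr_intensity(attenuations, step, source_intensity=1.0):
    """Transmitted intensity along one ray through a purely absorptive medium.

    attenuations: attenuation coefficient sampled at equal steps along the ray.
    step: distance between consecutive samples.
    """
    # Only the accumulated attenuation along the ray matters.
    optical_depth = sum(mu * step for mu in attenuations)
    return source_intensity * math.exp(-optical_depth)

# A dense structure near the front of the ray versus the same structure
# near the back (hypothetical sample values).
ray_front = [0.0, 0.8, 0.0, 0.0, 0.1]
ray_back = list(reversed(ray_front))

front = drr_intensity(ray_front, step=0.5)
back = drr_intensity(ray_back, step=0.5)
```

Because the result depends only on the sum along the ray, `front` and `back` agree up to floating-point rounding: the pixel value cannot tell the viewer where along the ray the absorbing structure lies. This is the gap that additional depth cues are meant to fill.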

In the thesis work, I studied the use of stereopsis and aerial perspective as depth cues in the human perception of volume-rendered images. Two experiments and one preliminary study were conducted to evaluate the effectiveness of stereopsis and simulated aerial perspective on the depth perception of DRRs. The results of these experiments suggest that both stereopsis and simulated aerial perspective can improve relative depth perception in purely absorptive media. These results provide new ways to visualize complex volumetric data and to explore the capabilities of the human visual system.

Mobile Emergency Triage

I have been working on the MET system under the supervision of Dr. Wojtek Michalowski from the University of Ottawa. The aim of the MET research program is to facilitate the rapid triage of children with varying acute conditions by means of a mobile clinical support system. This system allows ER medical personnel to quickly assess a patient as needing a specialist consult, as being resolved, or as requiring further investigation.

MET is a multiplatform system that will run on the web, a desktop computer or a mobile device. The mobile device can be carried by the user, so MET can be consulted at any place and time. Apart from the portability that facilitates triage by emergency personnel at a patient's bedside, MET supports wireless transfer of a patient's information and electronic data capture.

Motion View

For my undergraduate thesis, I worked together with Jennifer Lyon on the standalone package Motion View. The purpose of Motion View is to help in the visualization of complex bone rotations. Even the most experienced orthopedic surgeons have difficulty visualizing the outcome of a series of rotations. Motion View is a tool used to help visualize Chasles' Theorem, i.e. to view a number of rotations and translations in the form of one resulting screw movement.
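
The idea behind Chasles' Theorem can be sketched in a few lines: compose a series of rigid motions into a single rotation and translation, then read off the equivalent screw (an axis, a rotation angle about it, and a slide along it). The code below is an illustration written for this page under stated assumptions, not code from Motion View; all function names are hypothetical, and the axis formula used here only handles rotation angles strictly between 0 and 180 degrees.

```python
import math

def mat_mul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def mat_vec(a, v):
    return [sum(a[i][k] * v[k] for k in range(3)) for i in range(3)]

def rot_z(angle):
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def rot_x(angle):
    c, s = math.cos(angle), math.sin(angle)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]

def compose(first, second):
    """Single motion equal to applying `first`, then `second`.

    Each motion is a pair (R, t) acting on a point as p' = R p + t.
    """
    r1, t1 = first
    r2, t2 = second
    r = mat_mul(r2, r1)
    t = [a + b for a, b in zip(mat_vec(r2, t1), t2)]
    return r, t

def screw_parameters(r, t):
    """Return (axis, angle, slide, point_on_axis) for the motion p' = R p + t."""
    trace = r[0][0] + r[1][1] + r[2][2]
    angle = math.acos(max(-1.0, min(1.0, (trace - 1.0) / 2.0)))
    sin_a = math.sin(angle)
    if sin_a < 1e-9:
        raise ValueError("angle near 0 or 180 degrees is not handled here")
    # Unit axis from the antisymmetric part of the rotation matrix.
    axis = [(r[2][1] - r[1][2]) / (2 * sin_a),
            (r[0][2] - r[2][0]) / (2 * sin_a),
            (r[1][0] - r[0][1]) / (2 * sin_a)]
    # Slide: the component of the translation along the axis.
    slide = sum(ti * ai for ti, ai in zip(t, axis))
    t_perp = [ti - slide * ai for ti, ai in zip(t, axis)]
    # A point on the axis, from the translation part perpendicular to it.
    cross = [axis[1] * t_perp[2] - axis[2] * t_perp[1],
             axis[2] * t_perp[0] - axis[0] * t_perp[2],
             axis[0] * t_perp[1] - axis[1] * t_perp[0]]
    cot_half = 1.0 / math.tan(angle / 2.0)
    point = [0.5 * (tp + cot_half * cr) for tp, cr in zip(t_perp, cross)]
    return axis, angle, slide, point

# Two successive motions (hypothetical values) reduced to one screw.
step_a = (rot_z(0.4), [1.0, 0.0, 0.0])
step_b = (rot_x(0.7), [0.0, 2.0, 0.5])
r, t = compose(step_a, step_b)
axis, angle, slide, point = screw_parameters(r, t)
```

A useful sanity check is that any point on the returned axis is moved only along the axis: applying the composed motion to `point` gives `point` shifted by `slide` times `axis`.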